In computer architecture, cache memory plays an important role in speeding up data access. To use the cache efficiently, we need a method for placing main-memory data into it, and one of the simplest such methods is direct mapping.

Direct mapping maps every block of main memory to exactly one possible cache line. In other words, each memory block is always assigned to the same line in the cache.

What is Cache Memory?

Before defining direct mapping, we need to understand cache memory.

Cache memory is a small, high-speed store located close to the Central Processing Unit (CPU). It holds frequently accessed data and instructions so that the processor can retrieve them quickly, reducing the need to access slower main memory. Because cache capacity is limited, we need a mapping technique to decide where each piece of data is placed.

What is Direct Mapping?

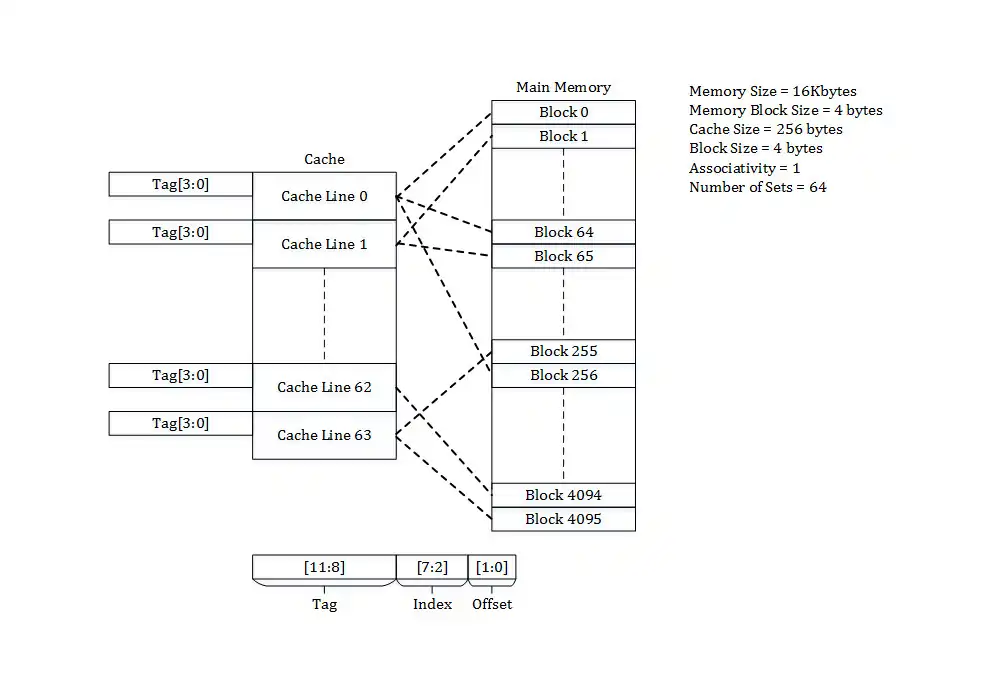

Direct mapping is the simplest method of mapping data from main memory to cache memory, and lookups are fast because only one cache line has to be checked. It is a commonly used cache mapping technique in which each block of main memory maps to exactly one location in the cache, called a cache line.

If that line already holds a memory block when a new block needs to be loaded, the old block is evicted from the cache.

The position is calculated using a simple formula:

Cache Line Number = (Block Number) MOD (Number of Cache Lines)
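As a minimal sketch, the formula above can be written as a short Python function. The names `cache_line_for`, `block_number`, and `num_lines` are illustrative choices for this article, not part of any library.

```python
def cache_line_for(block_number: int, num_lines: int) -> int:
    """Return the cache line a main-memory block maps to under direct mapping."""
    if num_lines <= 0:
        raise ValueError("num_lines must be positive")
    # MOD in the formula corresponds to Python's % operator.
    return block_number % num_lines


# Blocks whose numbers differ by a multiple of num_lines share a line:
# with 4 lines, blocks 0, 4, 8 and 12 all map to line 0.
for block in (0, 4, 8, 12):
    print(block, "->", cache_line_for(block, 4))
```

Because the result depends only on the block number and the number of lines, the cache never has to search for a free slot; it computes the line directly.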

Example of Direct Mapping:

Suppose main memory has 16 blocks and the cache has 4 lines.

Now, if we want to store Block 10, we use the formula: 10 MOD 4 = 2

So, Block 10 will be stored in cache line 2.

Now if we try to store Block 6: 6 MOD 4 = 2

Block 6 also maps to cache line 2, so it replaces Block 10. This is called a cache collision: two blocks compete for the same line.
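The example above can be reproduced with a small simulation. This is only a sketch under simplifying assumptions: the hypothetical `DirectMappedCache` class stores bare block numbers and uses `None` for an empty line, whereas real hardware also stores the data itself and extra bookkeeping bits.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that records which block each line holds."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = [None] * num_lines  # None marks an empty line

    def load(self, block):
        """Place block in its line and return the block it evicted, if any."""
        index = block % self.num_lines
        evicted = self.lines[index]
        self.lines[index] = block
        # Reloading the same block does not count as an eviction.
        return evicted if evicted != block else None


cache = DirectMappedCache(num_lines=4)
cache.load(10)            # 10 MOD 4 = 2, so Block 10 goes to line 2
evicted = cache.load(6)   # 6 MOD 4 = 2, so Block 6 takes over line 2

print(cache.lines)  # [None, None, 6, None]
print(evicted)      # 10
```

Lines 0, 1 and 3 stay empty the whole time, yet Block 10 is still evicted. The collision comes from the fixed mapping, not from the cache being full.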

Advantages of Direct Mapping:

The advantages of direct mapping in computer architecture are as follows:

- The algorithm is easy to implement and understand.

- Direct mapping requires very few comparators and less complex circuitry than associative mapping.

- Cache behavior under direct mapping is easier to debug and analyze.

- It consumes less power, which is beneficial for low-power devices.

- Direct mapping is cheaper to implement than more complex mapping techniques.

Disadvantages of Direct Mapping:

The disadvantages of direct mapping in computer architecture are as follows:

- Direct mapping may cause frequent overwriting and cache misses when several frequently used blocks map to the same line.

- Repeated cache misses can significantly degrade system performance.

- Continuously switching between conflicting blocks results in a high replacement frequency and reduced speed.

- It cannot adapt to varying memory access patterns, because the line for each block is fixed.

- As with any cache memory, the stored data and instructions are lost whenever the system is turned off.
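The conflict-related drawbacks in this list can be illustrated by counting misses for two access patterns. This is a rough sketch, not a performance model: the function `count_misses` and both access sequences are made up for illustration, and the cache starts empty.

```python
def count_misses(accesses, num_lines):
    """Count misses for a sequence of block accesses in a direct-mapped cache."""
    lines = [None] * num_lines  # None marks an empty line
    misses = 0
    for block in accesses:
        index = block % num_lines
        if lines[index] != block:
            # The requested block is not in its line: miss, then replace.
            misses += 1
            lines[index] = block
    return misses


conflicting = [10, 6] * 4  # Blocks 10 and 6 both map to line 2
friendly = [10, 7] * 4     # Blocks 10 and 7 map to lines 2 and 3

print(count_misses(conflicting, 4))  # 8: every access misses
print(count_misses(friendly, 4))     # 2: only the first access to each block misses
```

Both sequences touch just two blocks, and the cache has four lines, but the conflicting pattern never gets a hit because the two blocks keep evicting each other.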

Direct mapping is a basic but important technique for mapping main memory into cache memory. It is a simple solution used in many computer systems. Understanding how it works helps you appreciate how your computer handles data behind the scenes!